NVIDIA’s new open-source toolkit lets developers add topical, safety, and security features to AI chatbots and other generative AI applications built with large language models.

The software includes all the code, examples, and documentation businesses need to add safety to AI apps that generate text. NVIDIA said it’s releasing the project because many industries are adopting large language models (LLMs), the powerful engines behind these AI apps.

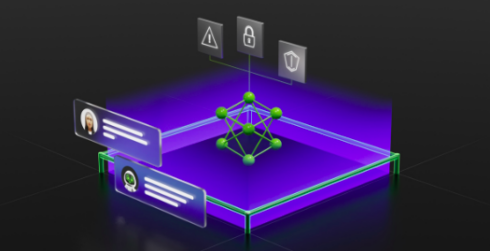

Users can set up three kinds of boundaries with NeMo Guardrails: topical, safety, and security.

Topical guardrails keep apps from veering into undesired areas. For example, they can prevent customer service assistants from answering questions about the weather.
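To make the idea concrete, here is a minimal sketch of a topical check in plain Python. It is not the NeMo Guardrails API. The keyword list, the refusal text, and the `generate` callback that stands in for an LLM call are all hypothetical, and a real system would likely use something more robust than keyword matching.

```python
import re
from typing import Callable, Optional

# Hypothetical off-topic keywords for a customer service assistant.
BLOCKED_TOPICS = {
    "weather": {"weather", "forecast", "rain", "temperature"},
}

# Hypothetical canned answer returned instead of calling the model.
REFUSAL = "Sorry, I can only help with questions about your account or orders."


def off_topic(message: str) -> Optional[str]:
    """Return the name of the blocked topic the message touches, if any."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for topic, keywords in BLOCKED_TOPICS.items():
        if words & keywords:
            return topic
    return None


def guarded_reply(message: str, generate: Callable[[str], str]) -> str:
    """Call the model only when the message stays on topic."""
    if off_topic(message) is not None:
        return REFUSAL
    return generate(message)
```

In this sketch, a question such as "What's the weather like today?" is answered with the refusal text and never reaches the model, while "Where is my order?" is passed through to `generate`.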

Safety guardrails ensure apps respond with accurate, appropriate information. They can filter out unwanted language and enforce that references are made only to credible sources.

Security guardrails restrict apps to connecting only to external third-party applications known to be safe.
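One common way to express this kind of restriction is an allowlist of trusted hosts. The sketch below is an illustration in plain Python, not how NeMo Guardrails implements it. The host names and the `send` callback that performs the actual request are hypothetical.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical set of third-party hosts the app is allowed to contact.
ALLOWED_HOSTS = {"api.example.com", "hooks.example.org"}


def is_allowed(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


def call_external(url: str, send: Callable[[str], str]) -> str:
    """Refuse the connection unless the target is known to be safe."""
    if not is_allowed(url):
        raise PermissionError(f"Blocked connection to untrusted target: {url}")
    return send(url)
```

The check compares the full host name rather than a substring, so a look-alike such as `api.example.com.evil.net` is rejected.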

NeMo Guardrails works with the tools that enterprise app developers commonly use. For example, it can run on top of LangChain, an open-source toolkit that developers are rapidly adopting to plug third-party applications into LLMs. NeMo Guardrails is also designed to be flexible enough to work with a broad range of LLM-enabled applications, such as Zapier.

The project is being incorporated into the NVIDIA NeMo framework, which already has open-source code on GitHub.