Xu Hao uses chain of thought and generated knowledge prompting with ChatGPT when writing self-testing code

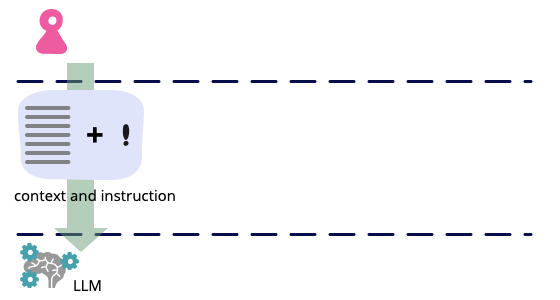

My account of an internal chat with Xu Hao, where he shows how he drives ChatGPT to produce useful self-tested code. His initial prompt primes the LLM with an implementation strategy (chain of thought prompting). His prompt also asks for an implementation plan rather than code (generated knowledge prompting). Once he has the plan, he uses it to refine the implementation and generate useful sections of code.

Recently I watched a fascinating call on Zoom. Xu Hao, Thoughtworks's Head of Technology in China, explained his explorations in using ChatGPT to help build Self Testing Code. He went through the kind of interaction that works for him.

He starts with a prompt that sets the context for the application and how he wants the code to be structured.

The current system is an online whiteboard system. Tech stack: typescript, react, redux, konvajs and react-konva. And vitest, react testing library for model, view model and related hooks, cypress component tests for view.

All codes should be written in the tech stack mentioned above. Requirements should be implemented as react components in the MVVM architecture pattern.

There are 2 types of view model in the system.

1. Shared view model. View model that represents states shared among local and remote users.
2. Local view model. View model that represents states only applicable to local user

Here are the common implementation strategy:

1. Shared view model is implemented as Redux store slice. Tested in vitest.
2. Local view model is implemented as React component props or states (by useState hook), except for global local view model, which is also implemented as Redux store slice. Tested in vitest.
3. Hooks are used as the major view helpers to retrieve data from shared view model. For most the case, it will use "createSelector" and "useSelector" for memorization. Tested in vitest and react testing library.
4. Don't dispatch action directly to change the states of shared view model, use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.
5. View is composed of konva shapes, and implemented as react component via react-konva. Tested in cypress component tests

Here are certain patterns should be followed when implement and test the component

1. When write test, use `describe` instead of `test`
2. Data-driven tests are preferred.
3. When test the view component, fake view model via the view model interface

Awareness Layer

Requirement: Display other users' awareness info (cursor, name and online information) on the whiteboard.

AC1: Don't display local user

AC2: When remote user changes cursor location, display the change in animation.

Provide an overall solution following the guidance mentioned above. Hint, keep all awareness information in a Konva layer, and an awareness info component to render cursor, and name. Don't generate code. Describe the solution, and breaking the solution down as a task list based on the guidance mentioned above. And we will refer this task list as our master plan.
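To make strategy point 3 and AC1 more concrete, here is a minimal sketch (not from the session) of a memoized selector that drops the local user before the view ever sees the list. It hand-rolls a one-entry cache as a stand-in for Reselect's `createSelector`, which the prompt names and which a real component would read through `useSelector`. The state shape, the `localUserId` field and every function name are hypothetical.

```typescript
// Hypothetical shape of the shared awareness view model.
interface UserAwareness {
  name: string;
  cursor: { x: number; y: number };
  online: boolean;
}

interface AwarenessState {
  localUserId: string;
  users: Record<string, UserAwareness>;
}

// Stand-in for Reselect's createSelector: recompute only when an input
// reference changes, otherwise hand back the cached result.
function memoizeOne<A, B, R>(
  inputA: (s: AwarenessState) => A,
  inputB: (s: AwarenessState) => B,
  combine: (a: A, b: B) => R,
): (s: AwarenessState) => R {
  let lastA: A | undefined;
  let lastB: B | undefined;
  let lastResult: R | undefined;
  let hasResult = false;
  return (s) => {
    const a = inputA(s);
    const b = inputB(s);
    if (!hasResult || a !== lastA || b !== lastB) {
      lastA = a;
      lastB = b;
      lastResult = combine(a, b);
      hasResult = true;
    }
    return lastResult as R;
  };
}

// AC1: the local user is filtered out here, so the view cannot render it.
const selectRemoteUsers = memoizeOne(
  (s: AwarenessState) => s.users,
  (s: AwarenessState) => s.localUserId,
  (users, localUserId) =>
    Object.entries(users)
      .filter(([id]) => id !== localUserId)
      .map(([id, info]) => ({ id, ...info })),
);
```

Returning the same array for unchanged inputs is what lets `useSelector` skip needless re-renders, which is what the prompt's request for memoization is after.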

There's a lot going on with this prompt, so he highlighted a few points.

He's using a generic application example here: one thing to be careful of when interacting with ChatGPT and the like is that we should never put anything that may be confidential into the prompt, as that would be a security risk. Business rules, any code from a real project: none of these should enter the interaction with ChatGPT.

Most of the prompt sets out the design guidelines that he wants ChatGPT's generated code to follow. He refined this by putting prompts into ChatGPT, looking at the result, and crafting the prompt to make it generate code in the style he wanted. Once he has the context working, he can paste it into every session he has with ChatGPT, and share it with the rest of the team.
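Reusing a refined context amounts to keeping it as a fixed prefix for every new conversation. A minimal sketch, assuming a chat API that accepts role-tagged messages; `ChatMessage`, `SHARED_CONTEXT`, `startSession` and `continueSession` are illustrative names rather than any particular vendor's API, and the context string is abbreviated.

```typescript
// Illustrative message shape; chat APIs typically use role/content pairs.
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Shared, non-confidential context, refined once and reused by the team.
// Abbreviated here: the full version would carry the whole implementation
// strategy and test patterns from the prompt.
const SHARED_CONTEXT = [
  "The current system is an online whiteboard system.",
  "Tech stack: typescript, react, redux, konvajs and react-konva.",
  "Requirements should be implemented as react components in the MVVM architecture pattern.",
].join("\n");

// Every new session opens with the same context, followed by the task at hand.
function startSession(taskPrompt: string): ChatMessage[] {
  return [{ role: "user", content: `${SHARED_CONTEXT}\n\n${taskPrompt}` }];
}

// Within a session the history is kept, so the context is not re-pasted.
// Returns a new array rather than mutating the existing history.
function continueSession(history: ChatMessage[], reply: string, next: string): ChatMessage[] {
  return [...history, { role: "assistant", content: reply }, { role: "user", content: next }];
}
```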

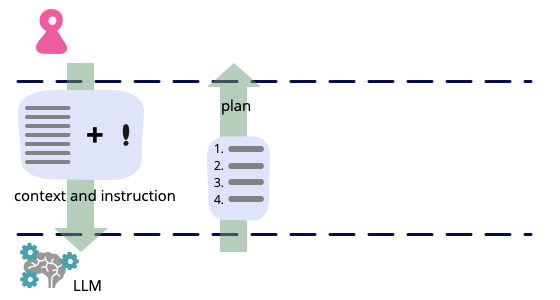

Xu Hao begins his session by prompting the ChatGPT LLM with context as well as the instruction to generate a plan.

Even though the final goal of this session is to generate code and its tests, at this stage he specifically said "Don't generate code".

At this point he wants ChatGPT to outline its approach before we look to any code generation. This is a prompt technique called Generated Knowledge.

A common way of interacting with an LLM is to give it a problem and ask it to generate some final product, whether that be prose or (in this case) code. With Generated Knowledge we split our interaction into separate steps: first we ask the LLM to generate some useful information about the problem, and then we feed that information back into the LLM to generate the final product.
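The two steps can be sketched as two calls to the same model, with the output of the first embedded in the second. This assumes a hypothetical synchronous `Complete` function (a real client call would be asynchronous). In a ChatGPT session the conversation history carries the plan forward implicitly; with a stateless call it has to be passed back explicitly, which makes the split easy to see.

```typescript
// Hypothetical completion function: prompt in, text out. Kept synchronous
// for brevity; a real LLM client call would return a Promise.
type Complete = (prompt: string) => string;

// Step 1: ask only for knowledge about the problem (a plan), not the product.
function generatePlan(complete: Complete, context: string): string {
  return complete(
    `${context}\n\nDon't generate code. Describe the solution, and break it down as a task list.`,
  );
}

// Step 2: feed the generated knowledge back in to produce the final product.
function generateCode(complete: Complete, context: string, plan: string, task: number): string {
  return complete(
    `${context}\n\nMaster plan:\n${plan}\n\nProvide example tests and implementation for task ${task}. Test first.`,
  );
}
```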

The most interesting part of the prompt is the "implementation strategy" section, which combines two styles of prompt:

- Instruction prompting provides instructions for the LLM to follow.
- Chain of Thought prompting encourages the LLM to explain its reasoning.

In the Implementation Strategy section, Xu Hao described the desired architecture pattern as an expected "Chain of Thought instructions" for ChatGPT to follow. Then he instructed ChatGPT to build a task list (the generated knowledge) based on this chain of thought.

Solution:

To implement the awareness layer feature, we can follow the guidelines mentioned in the problem statement and use the existing tech stack.

Task List:

1. Create a new component named "AwarenessInfo" to render cursor and name for remote users.
2. Create a new Konva layer to hold all the "AwarenessInfo" components.
3. Add the Konva layer to the main whiteboard component.
4. Create a new Redux slice to store the awareness information for remote users.
5. Create an encapsulated view model interface for the awareness Redux slice.
6. Implement the "AwarenessInfo" component to retrieve and display the awareness information for each remote user from the view model.
7. Implement a method in the encapsulated view model interface to update the awareness information for each remote user.
8. When a remote user changes the cursor location, dispatch the update method in the encapsulated view model interface to update the corresponding awareness information.
9. In the "AwarenessInfo" component, use the new location to animate the cursor change.
10. Write tests for the new "AwarenessInfo" component, including data-driven tests to ensure it properly displays the awareness information for all remote users.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location.

By following these tasks, we can implement the awareness layer feature in the online whiteboard system.

The response from ChatGPT is non-deterministic, so this result is just one run. If you (or I) put this prompt in again, we'll get a different response.

The key element of the response is an ordered task list, with numbers for each step. We can use these numbers to refer to these tasks for the rest of the session.
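If such sessions are scripted rather than typed into the chat UI, the numbered list can be pulled out of the response so that follow-ups such as "write tests for task 5" have an unambiguous target. A minimal sketch: `parseTaskList` is a hypothetical helper, and it assumes each task sits on its own line in `N. text` form, which a non-deterministic response is not guaranteed to follow.

```typescript
// Collect "N. text" lines into a map keyed by task number; any other
// lines (headings, closing remarks) are ignored.
function parseTaskList(response: string): Map<number, string> {
  const tasks = new Map<number, string>();
  for (const line of response.split("\n")) {
    const match = /^\s*(\d+)\.\s+(.+)$/.exec(line);
    if (match) {
      tasks.set(Number(match[1]), match[2].trim());
    }
  }
  return tasks;
}
```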

The LLM responds with a plan.

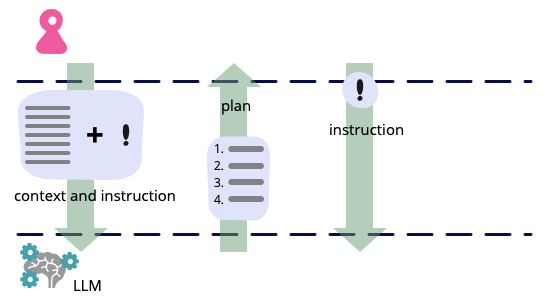

Xu Hao can now look at the master plan and see if it makes sense. If there are problems with it, he can ask ChatGPT to rewrite some of the tasks, or the entire master plan, by providing more information.

For example, the current master plan was not grouped by different types of components. One possible prompt might be:

Rewrite the master plan, and group tasks as model, view model and view.

This could be useful to verify the alignment between the architecture vision and the master plan.

These tweaks could be added to the initial prompt to get an overall better response. For this particular prompt, the following instruction was not in the initial prompt, but was added in a later iteration:

4. Don't dispatch action directly to change the states of shared view model, use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.
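A sketch of what this instruction asks for, paired with the prompt's third test pattern (faking the view model via its interface). It is illustrative only: the actions are plain objects rather than Redux Toolkit action creators, and `AwarenessViewModelApi`, `createAwarenessViewModel` and `FakeAwarenessViewModel` are hypothetical names.

```typescript
// Hypothetical actions for the shared awareness slice. With Redux Toolkit
// these would come from createSlice instead of being written by hand.
type AwarenessAction =
  | { type: "awareness/updateCursor"; payload: { user: string; x: number; y: number } }
  | { type: "awareness/setOnline"; payload: { user: string; online: boolean } };

// The only surface views see: one method per redux action.
interface AwarenessViewModelApi {
  updateCursor(user: string, x: number, y: number): void;
  setOnline(user: string, online: boolean): void;
}

// Production implementation: each method maps to exactly one dispatch.
function createAwarenessViewModel(
  dispatch: (action: AwarenessAction) => void,
): AwarenessViewModelApi {
  return {
    updateCursor: (user, x, y) =>
      dispatch({ type: "awareness/updateCursor", payload: { user, x, y } }),
    setOnline: (user, online) =>
      dispatch({ type: "awareness/setOnline", payload: { user, online } }),
  };
}

// Test double for view tests: records calls instead of touching a store.
class FakeAwarenessViewModel implements AwarenessViewModelApi {
  readonly calls: string[] = [];
  updateCursor(user: string, x: number, y: number): void {
    this.calls.push(`updateCursor ${user} ${x},${y}`);
  }
  setOnline(user: string, online: boolean): void {
    this.calls.push(`setOnline ${user} ${online}`);
  }
}
```

Because views depend only on the interface, a Cypress component test could hand them the fake and assert on `calls` without any store.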

Once the master plan looks reasonable, Xu Hao asks to regenerate it with names for the program elements.

Rewrite the master plan, this time with detailed component names, methods, and props

Sure, here's a revised master plan with detailed component names, methods, and props:

Task List:

1. Create a new component named "AwarenessInfo" to render cursor and name for remote users. This component will receive props such as user name, cursor position, and online status.
2. Create a new Konva layer named "AwarenessLayer" to hold all the "AwarenessInfo" components.
3. Add the "AwarenessLayer" to the main whiteboard component.
4. Create a new Redux slice named "awareness" to store the awareness information for remote users.
5. Create an encapsulated view model interface named "AwarenessViewModel" for the "awareness" Redux slice. This interface will contain a method named "updateUserAwarenessInfo" to update the awareness information for each remote user.
6. Implement the "AwarenessInfo" component to retrieve and display the awareness information for each remote user from the view model. The component will use the "useSelector" hook to retrieve the relevant information.
7. Implement the "updateUserAwarenessInfo" method in the "AwarenessViewModel" interface to dispatch a Redux action to update the awareness information for each remote user. This method will be called by the "MainWhiteboard" component.
8. When a remote user changes the cursor location, dispatch the "updateUserAwarenessInfo" method in the "AwarenessViewModel" interface to update the corresponding awareness information.
9. In the "AwarenessInfo" component, use the new location to animate the cursor change. This can be accomplished using the Konva "Tween" animation.
10. Write tests for the new "AwarenessInfo" component, including data-driven tests to ensure it properly displays the awareness information for all remote users. The tests will be written using the React Testing Library.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users. These tests will use the Vitest framework.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location. The tests will simulate user interactions with the whiteboard and verify the state of the "AwarenessLayer" and "AwarenessInfo" components.
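Task 9 delegates the animation for AC2 to Konva's `Tween`, which takes care of timing and redrawing. Underneath any such tween is an interpolation between the old and new cursor positions; the library-free sketch below shows that arithmetic with an assumed ease-out curve. It is not Konva's implementation, and `cursorAt` is a hypothetical name.

```typescript
interface Point {
  x: number;
  y: number;
}

// Quadratic ease-out: moves quickly at first, then slows near the target.
function easeOut(t: number): number {
  return 1 - (1 - t) * (1 - t);
}

// Cursor position `elapsedMs` into an animation lasting `durationMs`.
// Progress is clamped to [0, 1], so the cursor rests on `to` once done.
function cursorAt(from: Point, to: Point, elapsedMs: number, durationMs: number): Point {
  const t = durationMs <= 0 ? 1 : Math.min(Math.max(elapsedMs / durationMs, 0), 1);
  const k = easeOut(t);
  return {
    x: from.x + (to.x - from.x) * k,
    y: from.y + (to.y - from.y) * k,
  };
}
```

For example, halfway through a 300 ms move from (0, 0) to (100, 200), progress is 0.5, the eased factor is 0.75, and the cursor sits at (75, 150).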

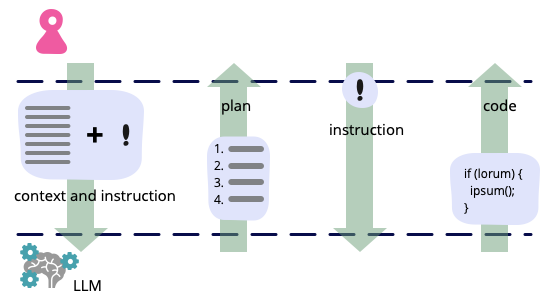

At this point, Xu Hao is ready to ask ChatGPT to provide code for one of the steps:

provide me example tests and implementation for the awareness slice and AwarenessViewModel. Test first.

Sure, let's start with writing tests for the awareness slice and AwarenessViewModel:

Test for Awareness Slice:

```typescript
describe('awareness slice', () => {
  let store: MockStore;

  beforeEach(() => {
    store = configureMockStore()(initialState);
  });

  it('should set the cursor location', () => {
    store.dispatch(setCursorLocation({ x: 100, y: 200 }));
    const state = store.getState().awareness;
    expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
  });

  it('should set the name', () => {
    store.dispatch(setName('John Doe'));
    const state = store.getState().awareness;
    expect(state.name).toBe('John Doe');
  });

  it('should set the online status', () => {
    store.dispatch(setOnline(true));
    const state = store.getState().awareness;
    expect(state.isOnline).toBe(true);
  });
});
```

Test for AwarenessViewModel:

```typescript
describe('AwarenessViewModel', () => {
  let store: MockStore;
  let awarenessViewModel: AwarenessViewModel;

  beforeEach(() => {
    store = configureMockStore()(initialState);
    awarenessViewModel = new AwarenessViewModel(store);
  });

  it('should set the cursor location', () => {
    awarenessViewModel.setCursorLocation({ x: 100, y: 200 });
    const state = store.getState().awareness;
    expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
  });

  it('should set the name', () => {
    awarenessViewModel.setName('John Doe');
    const state = store.getState().awareness;
    expect(state.name).toBe('John Doe');
  });

  it('should set the online status', () => {
    awarenessViewModel.setOnline(true);
    const state = store.getState().awareness;
    expect(state.isOnline).toBe(true);
  });
});
```

Now, let's implement the Awareness slice and AwarenessViewModel:

Awareness slice:

```typescript
interface AwarenessState {
  cursorLocation: { x: number; y: number };
  name: string;
  isOnline: boolean;
}

const initialState: AwarenessState = {
  cursorLocation: { x: 0, y: 0 },
  name: '',
  isOnline: false,
};

const awarenessSlice = createSlice({
  name: 'awareness',
  initialState,
  reducers: {
    setCursorLocation: (state, action: PayloadAction<{ x: number; y: number }>) => {
      state.cursorLocation = action.payload;
    },
    setName: (state, action: PayloadAction<string>) => {
      state.name = action.payload;
    },
    setOnline: (state, action: PayloadAction<boolean>) => {
      state.isOnline = action.payload;
    },
  },
});

export const { setCursorLocation, setName, setOnline } = awarenessSlice.actions;

export default awarenessSlice.reducer;
```

AwarenessViewModel:

```typescript
class AwarenessViewModel {
  private store: Store;

  constructor(store: Store) {
    this.store = store;
  }

  setCursorLocation(cursorLocation: { x: number; y: number }) {
    this.store.dispatch(setCursorLocation(cursorLocation));
  }

  setName(name: string) {
    this.store.dispatch(setName(name));
  }

  setOnline(isOnline: boolean) {
    this.store.dispatch(setOnline(isOnline));
  }
}
```

Note: In this implementation, I assumed that the AwarenessViewModel is used to set the values in the store. If it is also used to retrieve the values from the store, we can add corresponding methods to the AwarenessViewModel class.

(I've removed the styling from ChatGPT's response.)

Overall this code is neither bad nor perfect. It won't run as it stands. It's missing a `configureMockStore` and assumes it uses redux-mock-store, which isn't part of the tech stack. Xu Hao can fix these problems by asking the LLM to progressively tweak the code through a sequence of rewrites. Here's one to get rid of redux-mock-store.

rewrite the tests without redux-mock-store

Sure, here's an example implementation of the tests for the AwarenessViewModel and the awareness slice without using redux-mock-store:

```typescript
import { createAwarenessSlice, AwarenessState, updateAwareness } from './awarenessSlice';
import { AwarenessViewModel } from './AwarenessViewModel';

describe('AwarenessViewModel', () => {
  const mockDispatch = jest.fn();
  const initialState: AwarenessState = {
    users: {
      'user1': {
        name: 'User 1',
        cursorPosition: { x: 0, y: 0 },
        online: true,
      },
      'user2': {
        name: 'User 2',
        cursorPosition: { x: 100, y: 100 },
        online: true,
      },
    },
  };
  let viewModel: AwarenessViewModel;

  beforeEach(() => {
    viewModel = new AwarenessViewModel(initialState, mockDispatch);
  });

  describe('getUserNames', () => {
    it('returns the list of user names', () => {
      const names = viewModel.getUserNames();
      expect(names).toEqual(['User 1', 'User 2']);
    });
  });

  describe('getCursorPosition', () => {
    it('returns the cursor position for a given user ID', () => {
      const cursorPosition = viewModel.getCursorPosition('user1');
      expect(cursorPosition).toEqual({ x: 0, y: 0 });
    });

    it('returns undefined for an unknown user ID', () => {
      const cursorPosition = viewModel.getCursorPosition('unknown');
      expect(cursorPosition).toBeUndefined();
    });
  });

  describe('updateCursorPosition', () => {
    it('dispatches the updateAwareness action with the new cursor position', () => {
      viewModel.updateCursorPosition('user1', { x: 50, y: 50 });
      expect(mockDispatch).toHaveBeenCalledWith(updateAwareness({
        user: 'user1',
        cursorPosition: { x: 50, y: 50 },
      }));
    });
  });
});
```

And here's an example implementation of the awarenessSlice without using redux-mock-store:

```typescript
import { createSlice, PayloadAction } from '@reduxjs/toolkit';

export type CursorPosition = { x: number, y: number };

export type UserAwareness = {
  name: string;
  cursorPosition: CursorPosition;
  online: boolean;
};

export type AwarenessState = {
  users: Record<string, UserAwareness>;
};

const initialState: AwarenessState = {
  users: {},
};

const awarenessSlice = createSlice({
  name: 'awareness',
  initialState,
  reducers: {
    updateAwareness: (state, action: PayloadAction<{ user: string, cursorPosition: CursorPosition }>) => {
      const { user, cursorPosition } = action.payload;
      state.users[user].cursorPosition = cursorPosition;
    },
    setOnline: (state, action: PayloadAction<{ user: string, online: boolean }>) => {
      const { user, online } = action.payload;
      state.users[user].online = online;
    },
  },
});

export const { updateAwareness, setOnline } = awarenessSlice.actions;

export const awarenessReducer = awarenessSlice.reducer;
```

Note that since we're not using redux-mock-store, we need to pass a mock dispatch function to the view model constructor, and in the test for updateCursorPosition we need to check that the correct action was dispatched using jest.fn().

Further rewrites can fit in more adjustments. For example, asking it to "Use `configureTestingStore('awareness', initialState)` to initialize the test store" can get it to fit in with the team's test idioms. Then, with some path adjustments and a small amount of glue for the reducers, the code can run just fine.
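Dropping redux-mock-store is possible because a slice reducer is a pure function: a test can call it with a state and an action and inspect the result, with no store at all. The sketch below combines that idea with the data-driven style the prompt prefers. To stay self-contained it uses a hand-written reducer mirroring the first awareness slice instead of Redux Toolkit, and plain checks instead of vitest, so all names are illustrative.

```typescript
interface AwarenessState {
  cursorLocation: { x: number; y: number };
  name: string;
  isOnline: boolean;
}

type AwarenessAction =
  | { type: "awareness/setCursorLocation"; payload: { x: number; y: number } }
  | { type: "awareness/setName"; payload: string }
  | { type: "awareness/setOnline"; payload: boolean };

const initialState: AwarenessState = { cursorLocation: { x: 0, y: 0 }, name: "", isOnline: false };

// Pure reducer: returns a new state object and never mutates its input.
function awarenessReducer(state: AwarenessState, action: AwarenessAction): AwarenessState {
  switch (action.type) {
    case "awareness/setCursorLocation":
      return { ...state, cursorLocation: action.payload };
    case "awareness/setName":
      return { ...state, name: action.payload };
    case "awareness/setOnline":
      return { ...state, isOnline: action.payload };
    default:
      return state;
  }
}

// Data-driven table: each row is an action and the fields it should set.
const cases: Array<{ action: AwarenessAction; expected: Partial<AwarenessState> }> = [
  { action: { type: "awareness/setCursorLocation", payload: { x: 100, y: 200 } }, expected: { cursorLocation: { x: 100, y: 200 } } },
  { action: { type: "awareness/setName", payload: "John Doe" }, expected: { name: "John Doe" } },
  { action: { type: "awareness/setOnline", payload: true }, expected: { isOnline: true } },
];

// Runs every row against the initial state; returns descriptions of failures.
function runCases(): string[] {
  const failures: string[] = [];
  for (const { action, expected } of cases) {
    const next = awarenessReducer(initialState, action);
    for (const [key, value] of Object.entries(expected)) {
      const actual = next[key as keyof AwarenessState];
      if (JSON.stringify(actual) !== JSON.stringify(value)) {
        failures.push(`${action.type}: ${key} was ${JSON.stringify(actual)}`);
      }
    }
  }
  return failures;
}
```

In the project itself, the `cases` table would more naturally feed vitest's `it.each` inside a `describe` block.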

Once this is working, Xu Hao can repeat the process for the rest of the tasks in the master plan.

My takeaway from this discussion was that chain of thought and generated knowledge prompting can be a significantly useful tool for programming. In particular, it shows that to use LLMs well, we need to learn how to construct prompts to get the best results. This experience suggests that it's useful to interact with the LLM like a junior partner: starting them with architectural guidelines, asking them to show their reasoning, and tweaking their outputs as we go.