This is part 2 of Rockset’s Understanding Real-Time Analytics on Streaming Data series. In part 1, we covered the technology landscape for real-time analytics on streaming data. In this post, we’ll explore the differences between real-time analytics databases and stream processing frameworks. In the coming weeks we’ll publish the following:

- Part 3 will offer recommendations for operationalizing streaming data, including a few sample architectures

- Part 4 will feature a case study highlighting a successful implementation of real-time analytics on streaming data

Unless you’re already familiar with basic streaming data concepts, please check out part 1, because we’re going to assume some level of working knowledge. With that, let’s dive in.

Varying Paradigms

Stream processing systems and real-time analytics (RTA) databases are both exploding in popularity. However, it’s difficult to talk about their differences in terms of “features”, because you can use either for almost any relevant use case. It’s easier to talk about the different approaches they take. This blog will clarify some conceptual differences, provide an overview of popular tools, and offer a framework for deciding which tools are best suited to specific technical requirements.

Let’s start with a quick summary of both stream processing and RTA databases. Stream processing systems allow you to aggregate, filter, join, and analyze streaming data. “Streams”, as opposed to tables in a relational database context, are the first-class citizens in stream processing. Stream processing approximates something like a continuous query; each event that passes through the system is analyzed according to predefined criteria and can be consumed by other systems. Stream processing systems are rarely used as persistent storage. They’re a “process”, not a “store”, which brings us to …

Real-time analytics databases are frequently used for persistent storage (though there are exceptions) and have a bounded context rather than an unbounded context. These databases can ingest streaming events, index the data, and enable millisecond-latency analytical queries against that data. Real-time analytics databases have a lot of overlap with stream processing; they both enable you to aggregate, filter, join, and analyze high volumes of streaming data for use cases like anomaly detection, personalization, logistics, and more. The biggest difference between RTA databases and stream processing tools is that databases provide persistent storage, bounded queries, and indexing capabilities.

So do you need just one? Both? Let’s get into the details.

Stream Processing … How Does It Work?

Stream processing tools manipulate streaming data as it flows through a streaming data platform (Kafka is one of the most popular options, but there are others). This processing happens incrementally, as the streaming data arrives.

Stream processing systems typically use a directed acyclic graph (DAG), with nodes that are responsible for different functions, such as aggregations, filtering, and joins. The nodes work in a daisy-chain fashion: data arrives, it hits one node and is processed, and then that node passes the processed data on to the next node. This continues until the data has been processed according to predefined criteria; the arrangement of nodes and the logic they apply is referred to as a topology. Nodes can live on different servers, connected by a network, as a way to scale horizontally to handle massive volumes of data. This is what’s meant by a “continuous query”: data comes in, it’s transformed, and results are generated continuously. When the processing is complete, other applications or systems can subscribe to the processed stream and use it for analytics or within an application or service. One additional note: many stream processing platforms support declarative languages like SQL, and they also support Java, Scala, or Python, which are appropriate for advanced use cases like machine learning.
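
To make the daisy-chain idea concrete, here’s a minimal single-process sketch in Python. It is not how Flink, Spark, or ksqlDB are implemented: each “node” is just a generator, the event fields (`user`, `action`, `page`) are hypothetical, and a real topology would run the nodes on separate workers connected over the network.

```python
from typing import Callable, Dict, Iterable, Iterator

Event = Dict[str, str]


def source(events: Iterable[Event]) -> Iterator[Event]:
    """Emit events one at a time, as if they were arriving from a topic."""
    for event in events:
        yield event


def filter_node(stream: Iterator[Event], keep: Callable[[Event], bool]) -> Iterator[Event]:
    """Pass an event to the next node only if it satisfies the predicate."""
    for event in stream:
        if keep(event):
            yield event


def map_node(stream: Iterator[Event], fn: Callable[[Event], Event]) -> Iterator[Event]:
    """Transform each event and hand the result to the next node."""
    for event in stream:
        yield fn(event)


def build_topology(events: Iterable[Event]) -> Iterator[Event]:
    """Wire the nodes together: source -> filter (clicks only) -> map (project fields)."""
    clicks = filter_node(source(events), lambda e: e["action"] == "click")
    return map_node(clicks, lambda e: {"user": e["user"], "page": e["page"]})


if __name__ == "__main__":
    incoming = [
        {"user": "a", "action": "click", "page": "/home"},
        {"user": "b", "action": "view", "page": "/pricing"},
        {"user": "c", "action": "click", "page": "/docs"},
    ]
    # Generators are lazy: each event travels through every node
    # before the next event is pulled from the source.
    for result in build_topology(incoming):
        print(result)
```

Running it prints the two click events, reduced to their `user` and `page` fields.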

Stateful Or Not?

Stream processing operations can be either stateless or stateful. Stateless stream processing is far simpler. A stateless process doesn’t depend contextually on anything that came before it. Imagine an event containing purchase information. If you have a stream processor filtering out any purchase below $50, that operation is independent of other events, and therefore stateless.
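
Here’s a minimal sketch of that stateless filter, assuming a hypothetical `Purchase` record with an `amount_usd` field. The point is that the function keeps no memory between events.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Purchase:
    order_id: str
    amount_usd: float


def drop_small_purchases(
    purchases: Iterable[Purchase], threshold_usd: float = 50.0
) -> Iterator[Purchase]:
    """Stateless filter: each decision looks only at the current event."""
    for purchase in purchases:
        if purchase.amount_usd >= threshold_usd:
            yield purchase


if __name__ == "__main__":
    stream = [Purchase("o1", 20.0), Purchase("o2", 75.5), Purchase("o3", 50.0)]
    kept = [p.order_id for p in drop_small_purchases(stream)]
    print(kept)  # ['o2', 'o3']
```

Because nothing is remembered between events, the stream could be split across any number of workers and the set of surviving purchases would be the same.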

Stateful stream processing takes into account the history of the data. The result for each incoming item depends not only on its own content, but also on the content of the previous item (or several previous items). State is required for operations like running totals, as well as for more complex operations that join data from one stream with another.

For example, consider an application that processes a stream of sensor data. Let’s say that the application needs to compute the average temperature for each sensor over a specific time window. In this case, the stateful processing logic would need to maintain a running total of the temperature readings for each sensor, as well as a count of the number of readings that have been processed for each sensor. This information would be used to compute the average temperature for each sensor over the specified time period or window.
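
A minimal sketch of that logic, assuming fixed, non-overlapping (tumbling) windows and integer timestamps in seconds; the class and method names are hypothetical. The two dictionaries are the operator’s state, playing the role of a tiny in-memory state store.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple

WindowKey = Tuple[str, int]  # (sensor_id, window_start_seconds)


class WindowedAverage:
    """Average temperature per sensor over tumbling time windows.

    State: a running total and a reading count per (sensor, window).
    """

    def __init__(self, window_seconds: int) -> None:
        self.window_seconds = window_seconds
        self.totals: DefaultDict[WindowKey, float] = defaultdict(float)
        self.counts: DefaultDict[WindowKey, int] = defaultdict(int)

    def window_start(self, ts: int) -> int:
        """Align a timestamp (in seconds) to the start of its window."""
        return ts - ts % self.window_seconds

    def process(self, sensor_id: str, ts: int, temperature: float) -> float:
        """Fold one reading into the state and return the window's current average."""
        key = (sensor_id, self.window_start(ts))
        self.totals[key] += temperature
        self.counts[key] += 1
        return self.totals[key] / self.counts[key]


if __name__ == "__main__":
    agg = WindowedAverage(window_seconds=60)
    print(agg.process("s1", 0, 20.0))   # 20.0
    print(agg.process("s1", 30, 22.0))  # 21.0 (same window as the first reading)
    print(agg.process("s2", 10, 30.0))  # 30.0 (different sensor, separate state)
    print(agg.process("s1", 65, 25.0))  # 25.0 (new window starting at t=60)
```

Note that this state only ever grows; a production operator would evict each window’s entries once the window closes.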

These state categories relate to the “continuous query” concept that we discussed in the introduction. When you query a database, you’re querying the current state of its contents. In stream processing, a continuous, stateful query requires maintaining state separately from the DAG, which is done by querying a state store, i.e. an embedded database within the framework. State stores can reside in memory, on disk, or in deep storage, and there is a latency/cost tradeoff for each.

Stateful stream processing is quite complex. Architectural details are beyond the scope of this blog, but here are four challenges inherent in stateful stream processing:

- Managing state is expensive: Storing and updating the state requires significant processing resources. The state must be updated for each incoming data item, and this can be difficult to do efficiently, especially for high-throughput data streams.

- Out-of-order data is hard to handle: this is an absolute must for all stateful stream processing. If data arrives out of order, the state needs to be corrected and updated, which adds processing overhead.

- Fault tolerance takes work: Significant steps must be taken to ensure data is not lost or corrupted in the event of a failure. This requires robust mechanisms for checkpointing, state replication, and recovery.

- Debugging and testing are challenging: The complexity of the processing logic and stateful context can make reproducing and diagnosing errors in these systems difficult. Much of this is due to the distributed nature of stream processing systems: multiple components and multiple data sources make root cause analysis a challenge.
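
To illustrate the out-of-order challenge, here’s a simplified sketch of a watermark, the common approach to this problem (Flink, for example, uses watermarks). The class name and the `allowed_lateness` parameter are hypothetical: the watermark trails the highest event time seen so far, windows stay open and correctable until the watermark passes their end, and anything arriving after that is counted as late.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class OutOfOrderWindowCounter:
    """Count events per tumbling window while tolerating bounded lateness.

    watermark = highest event time seen so far - allowed_lateness.
    A window is finalized (emitted, and its state dropped) once the watermark
    passes its end. Events for an already-finalized window are late.
    """

    def __init__(self, window_seconds: int, allowed_lateness: int) -> None:
        self.window_seconds = window_seconds
        self.allowed_lateness = allowed_lateness
        self.open_windows: DefaultDict[int, int] = defaultdict(int)
        self.max_event_time = float("-inf")
        self.finalized: List[Tuple[int, int]] = []  # (window_start, count)
        self.late_events = 0

    def watermark(self) -> float:
        return self.max_event_time - self.allowed_lateness

    def process(self, event_time: int) -> None:
        start = event_time - event_time % self.window_seconds
        if start + self.window_seconds <= self.watermark():
            # This window's result was already emitted; it can't be corrected.
            self.late_events += 1
            return
        # Possibly out of order, but within the allowed lateness: update state.
        self.open_windows[start] += 1
        self.max_event_time = max(self.max_event_time, event_time)
        self._finalize_closed_windows()

    def _finalize_closed_windows(self) -> None:
        for start in sorted(self.open_windows):
            if start + self.window_seconds <= self.watermark():
                self.finalized.append((start, self.open_windows.pop(start)))


if __name__ == "__main__":
    counter = OutOfOrderWindowCounter(window_seconds=10, allowed_lateness=5)
    for t in [1, 12, 8, 27, 3]:  # 8 and 3 arrive out of order
        counter.process(t)
    print(counter.finalized)    # [(0, 2), (10, 1)]
    print(counter.late_events)  # 1
```

The event at t=8 arrives after t=12 but still lands in the first window, because that window is still open; the event at t=3 arrives after the first window has been finalized and is counted as late.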

While stateless stream processing has value, the more interesting use cases require state. Handling state makes stream processing tools more difficult to work with than RTA databases.

Where Do I Start With Processing Tools?

In the past few years, the number of available stream processing systems has grown significantly. This blog will cover some of the big players, both open source and fully managed, to give readers a sense of what’s available.

Apache Flink

Apache Flink is an open-source, distributed framework designed to perform real-time stream processing. It is a project of the Apache Software Foundation and is written in Java and Scala. Flink is one of the more popular stream processing frameworks due to its flexibility, performance, and community (Lyft, Uber, and Alibaba are all users, and the open-source community for Flink is quite active). It supports a wide variety of data sources and programming languages, and, of course, supports stateful stream processing.

Flink uses a dataflow programming model that allows it to analyze streams as they are generated, rather than in batches. It relies on checkpoints to process data correctly even if a subset of nodes fails. Flink’s distributed architecture makes this possible, but be aware that it requires significant expertise and operational upkeep to tune, maintain, and debug.
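
The core idea behind checkpoint-based recovery can be sketched in a few lines: snapshot the operator’s state together with the input offset, and after a failure restore both and replay the input from that offset. This is a single-process analogy with hypothetical names, not Flink’s API; Flink coordinates such snapshots across many distributed operators, and replay assumes a replayable source such as a Kafka topic.

```python
from typing import Dict, List, Tuple


class CheckpointedCounter:
    """Count events per key, with checkpoint/restore for crash recovery.

    A checkpoint captures the state and the input offset together, so after
    a failure both are restored and the log is re-read from that offset.
    Each event ends up reflected in the state exactly once.
    """

    def __init__(self) -> None:
        self.counts: Dict[str, int] = {}
        self.offset = 0  # index of the next event to read from the log
        self.checkpoint: Tuple[Dict[str, int], int] = ({}, 0)

    def process(self, log: List[str], upto: int) -> None:
        """Consume events from the current offset up to (not including) `upto`."""
        while self.offset < upto:
            key = log[self.offset]
            self.counts[key] = self.counts.get(key, 0) + 1
            self.offset += 1

    def take_checkpoint(self) -> None:
        self.checkpoint = (dict(self.counts), self.offset)

    def recover(self) -> None:
        """Discard in-flight state and roll back to the last checkpoint."""
        counts, offset = self.checkpoint
        self.counts = dict(counts)
        self.offset = offset


if __name__ == "__main__":
    log = ["a", "b", "a", "c", "a"]  # stands in for a replayable topic
    counter = CheckpointedCounter()
    counter.process(log, upto=2)
    counter.take_checkpoint()        # state {'a': 1, 'b': 1}, offset 2
    counter.process(log, upto=4)     # progress made after the checkpoint...
    counter.recover()                # ...is lost in a simulated crash
    counter.process(log, upto=len(log))
    print(counter.counts)            # {'a': 3, 'b': 1, 'c': 1}
```

The final counts match a single clean pass over the log, which is the property checkpointing is meant to preserve.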

Apache Spark Streaming

Spark Streaming is another popular stream processing framework. It is also open source and is well suited to high-complexity, high-volume use cases.

Unlike Flink, Spark Streaming uses a micro-batch processing model, in which incoming data is collected into small batches over a fixed time interval and each batch is then processed. This results in higher end-to-end latencies. When it comes to fault tolerance, Spark Streaming uses a mechanism called “RDD lineage” to recover from failures, which can sometimes cause significant overhead in processing time. There’s support for SQL through the Spark SQL library, but it’s more limited than other stream processing libraries, so check that it can support your use case. On the other hand, Spark Streaming has been around longer than other systems, which makes it easier to find best practices and even free, open-source code for common use cases.
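
Why does micro-batching add latency? A rough sketch, assuming batches are cut on fixed time intervals and using integer milliseconds (the function names are hypothetical, not Spark APIs): an event can’t be processed until its batch closes, so it waits for the remainder of its interval, on top of the processing time itself.

```python
from typing import Dict, List, Tuple


def micro_batches(events: List[Tuple[int, str]], interval_ms: int) -> Dict[int, List[str]]:
    """Group (arrival_ms, payload) pairs into batches by fixed time interval."""
    batches: Dict[int, List[str]] = {}
    for arrival_ms, payload in events:
        batch_id = arrival_ms // interval_ms
        batches.setdefault(batch_id, []).append(payload)
    return batches


def batching_delay_ms(arrival_ms: int, interval_ms: int) -> int:
    """How long an event waits for its batch to close before processing starts."""
    batch_end = (arrival_ms // interval_ms + 1) * interval_ms
    return batch_end - arrival_ms


if __name__ == "__main__":
    events = [(100, "a"), (400, "b"), (1200, "c")]
    print(micro_batches(events, interval_ms=1000))  # {0: ['a', 'b'], 1: ['c']}
    print([batching_delay_ms(t, 1000) for t, _ in events])  # [900, 600, 800]
```

A per-event engine would start processing each of these events as soon as it arrived, so this waiting time is the gap the paragraph above refers to.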

Confluent Cloud and ksqlDB

As of today, Confluent Cloud’s primary stream processing offering is ksqlDB, which combines KSQL’s familiar SQL-esque syntax with additional features such as connectors, a persistent query engine, windowing, and aggregation.

One key feature of ksqlDB in Confluent Cloud is that it’s offered as a fully managed service, which makes it simpler to deploy and scale. Contrast this with Flink, which can be deployed in a variety of configurations, including as a standalone cluster, on YARN, or on Kubernetes (note that there are also fully managed versions of Flink). ksqlDB supports a SQL-like query language, provides a range of built-in functions and operators, and can also be extended with custom user-defined functions (UDFs) and operators. ksqlDB is also tightly integrated with the Kafka ecosystem and is designed to work seamlessly with Kafka streams, topics, and brokers.

But Where Will My Data Live?

Real-time analytics (RTA) databases are categorically different from stream processing systems. They belong to a distinct and growing market, and yet have some overlap in functionality. For an overview of what we mean by “RTA database”, check out this guide.

In the context of streaming data, RTA databases are used as a sink for streaming data. They are also useful for real-time analytics and data applications, but they serve up data when they’re queried, rather than continuously. When you ingest data into an RTA database, you have the option to configure ingest transformations, which can do things like filter, aggregate, and in some cases join data continuously. The data lives in a table, which you can’t “subscribe” to the same way you can with streams.

Aside from the table vs. stream distinction, another key feature of RTA databases is their ability to index data; stream processing frameworks index very narrowly, while RTA databases have a large menu of options. Indexes are what allow RTA databases to serve millisecond-latency queries, and each type of index is optimized for a particular query pattern. The best RTA database for a given use case will often come down to indexing options. If you’re looking to perform very fast aggregations on historical data, you’ll likely choose a column-oriented database with a primary index. Looking to look up data on a single order? Choose a database with an inverted index. The point here is that every RTA database makes different indexing choices. The best choice will depend on your query patterns and ingest requirements.
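
A toy illustration of why index type matters, assuming hypothetical order rows that all share the same fields. A column-oriented layout lets an aggregation read one contiguous list instead of every field of every row, while an inverted index maps a value straight to the rows that contain it. Real databases add compression, sorting, and on-disk formats that this sketch ignores.

```python
from collections import defaultdict
from typing import Any, Dict, List

Row = Dict[str, Any]


def build_columns(rows: List[Row]) -> Dict[str, List[Any]]:
    """Column-oriented layout: one list per field (assumes all rows share fields)."""
    columns: Dict[str, List[Any]] = defaultdict(list)
    for row in rows:
        for field, value in row.items():
            columns[field].append(value)
    return dict(columns)


def build_inverted_index(rows: List[Row], field: str) -> Dict[Any, List[int]]:
    """Inverted index: map each value of `field` to the positions of matching rows."""
    index: Dict[Any, List[int]] = defaultdict(list)
    for position, row in enumerate(rows):
        index[row[field]].append(position)
    return dict(index)


if __name__ == "__main__":
    orders = [
        {"order_id": "o1", "customer": "alice", "amount": 30},
        {"order_id": "o2", "customer": "bob", "amount": 45},
        {"order_id": "o3", "customer": "alice", "amount": 25},
    ]
    # Aggregation: scan a single column.
    columns = build_columns(orders)
    print(sum(columns["amount"]))  # 100
    # Point lookup: jump straight to the matching row instead of scanning.
    by_order = build_inverted_index(orders, "order_id")
    print([orders[i] for i in by_order["o2"]])
```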

One final point of comparison: enrichment. In fairness, you can enrich streaming data with additional data in a stream processing framework. You can essentially “join” (to use database parlance) two streams in real time. Inner joins, left or right joins, and full outer joins are all supported in stream processing. Depending on the system, you can also query the state to join historical data with live data. Just know that this can be difficult; there are many tradeoffs to be made around cost, complexity, and latency. RTA databases, on the other hand, have simpler methods for enriching or joining data. A common method is denormalizing, which is essentially flattening and aggregating two tables. This method has its issues, but there are other options as well. Rockset, for example, is able to perform inner joins on streaming data at ingest, and any type of join at query time.
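
To show why stream-stream joins are stateful, here’s a sketch of a windowed inner join implemented as a symmetric hash join, one common technique; frameworks differ in the details. The event shape and the orders/payments example are hypothetical. Every event is buffered, and that buffer is exactly the state the framework has to store, checkpoint, and eventually expire.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Iterator, List, Tuple

# (side, event_time, join_key, payload); side is "left" or "right"
Event = Tuple[str, int, str, dict]
Buffer = DefaultDict[str, List[Tuple[int, dict]]]


def windowed_inner_join(
    events: Iterable[Event], window: int
) -> Iterator[Tuple[str, dict, dict]]:
    """Join two streams on a key when their event times are within `window`.

    Each event is probed against the buffered events of the other stream,
    then buffered itself. The buffers are never pruned here; a real operator
    would expire entries older than the window to keep state bounded.
    """
    buffers: Dict[str, Buffer] = {"left": defaultdict(list), "right": defaultdict(list)}
    for side, ts, key, payload in events:
        other = "right" if side == "left" else "left"
        for other_ts, other_payload in buffers[other][key]:
            if abs(ts - other_ts) <= window:
                if side == "left":
                    yield key, payload, other_payload
                else:
                    yield key, other_payload, payload
        buffers[side][key].append((ts, payload))


if __name__ == "__main__":
    events = [
        ("left", 0, "o1", {"item": "book"}),   # order
        ("right", 5, "o1", {"paid": 12}),      # matching payment, 5s later
        ("left", 10, "o2", {"item": "pen"}),
        ("right", 100, "o2", {"paid": 2}),     # too late for a 30s window
    ]
    print(list(windowed_inner_join(events, window=30)))
```

Only order `o1` is emitted; the `o2` payment falls outside the window, which is the inner-join behavior.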

The upshot of RTA databases is that they enable users to execute complex, millisecond-latency queries against data that’s 1-2 seconds old. Both stream processing frameworks and RTA databases allow users to transform and serve data. They both offer the ability to enrich, aggregate, filter, and otherwise analyze streams in real time.

Let’s get into three popular RTA databases and evaluate their strengths and weaknesses.

Elasticsearch

Elasticsearch is an open-source, distributed search database that allows you to store, search, and analyze large volumes of data in near real-time. It’s quite scalable (with work and expertise), and commonly used for log analysis, full-text search, and real-time analytics.

In order to enrich streaming data with additional data in Elasticsearch, you need to denormalize it. This requires aggregating and flattening data before ingestion. Most stream processing tools don’t require this step. Elasticsearch users typically see high performance for real-time analytical queries on text fields. However, if Elasticsearch receives a high volume of updates, performance degrades significantly. Furthermore, when an update or insert occurs upstream, Elasticsearch has to reindex that data for each of its replicas, which consumes compute resources. Many streaming data use cases are append-only, but many are not; consider both your update frequency and denormalization before choosing Elasticsearch.
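
A minimal sketch of what denormalizing before ingestion means, using hypothetical `orders` and `customers` records (this is plain Python preparing documents, not an Elasticsearch client call). It also shows the update cost: once customer attributes are copied into every order document, a change to one customer means rewriting, and reindexing, every document that embeds it.

```python
from typing import Dict, List

Customer = Dict[str, str]
Order = Dict[str, object]


def denormalize(order: Order, customers: Dict[str, Customer]) -> Order:
    """Copy the customer's attributes into the order so the document is self-contained."""
    customer = customers[str(order["customer_id"])]
    doc = dict(order)
    doc["customer_name"] = customer["name"]
    doc["customer_tier"] = customer["tier"]
    return doc


def docs_to_rewrite(docs: List[Order], customer_id: str) -> List[object]:
    """Every document embedding this customer must be re-sent if the customer changes."""
    return [doc["order_id"] for doc in docs if doc["customer_id"] == customer_id]


if __name__ == "__main__":
    customers = {"c1": {"name": "Alice", "tier": "gold"}, "c2": {"name": "Bob", "tier": "silver"}}
    orders = [
        {"order_id": "o1", "customer_id": "c1", "amount": 30},
        {"order_id": "o2", "customer_id": "c2", "amount": 45},
        {"order_id": "o3", "customer_id": "c1", "amount": 25},
    ]
    docs = [denormalize(o, customers) for o in orders]
    print(docs[0])
    # If Alice's tier changes, both of her orders need to be reindexed.
    print(docs_to_rewrite(docs, "c1"))  # ['o1', 'o3']
```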

Apache Druid

Apache Druid is a high-performance, column-oriented data store that is designed for sub-second analytical queries and real-time data ingestion. It is often described as a timeseries database, and excels at filtering and aggregations. Druid is a distributed system, often used in big data applications. It’s known both for its performance and for being difficult to operationalize.

When it comes to transformations and enrichment, Druid has the same denormalization challenges as Elasticsearch. If you’re relying on your RTA database to join multiple streams, consider handling those operations elsewhere; denormalizing is a pain. Updates present a similar challenge. If Druid ingests an update from streaming data, it has to reindex all data in the affected segment, which is a subset of data corresponding to a time range. This introduces both latency and compute cost. If your workload is update-heavy, consider choosing a different RTA database for streaming data. Finally, it’s worth noting that some SQL features are not supported by Druid’s query language, such as subqueries, correlated queries, and full outer joins.
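
A toy model of that update cost, not Druid’s actual ingestion code: rows are partitioned into time-range segments (one-hour segments here are an arbitrary, hypothetical choice), segments are treated as immutable, and changing a single row means rebuilding its whole segment.

```python
from collections import defaultdict
from typing import Any, Dict, List

Row = Dict[str, Any]
SEGMENT_SECONDS = 3600  # hypothetical: one segment per hour of event time


def build_segments(rows: List[Row]) -> Dict[int, List[Row]]:
    """Partition rows into time-range segments keyed by hour."""
    segments: Dict[int, List[Row]] = defaultdict(list)
    for row in rows:
        segments[row["ts"] // SEGMENT_SECONDS].append(row)
    return dict(segments)


def apply_update(segments: Dict[int, List[Row]], updated: Row) -> int:
    """Update one row by rebuilding its whole segment; return rows rewritten.

    Assumes the row's timestamp is unchanged, so it stays in the same segment.
    """
    segment_id = updated["ts"] // SEGMENT_SECONDS
    rebuilt = [updated if row["id"] == updated["id"] else row for row in segments[segment_id]]
    segments[segment_id] = rebuilt  # the old segment is replaced wholesale
    return len(rebuilt)


if __name__ == "__main__":
    rows = [
        {"id": 1, "ts": 10, "value": "a"},
        {"id": 2, "ts": 20, "value": "b"},
        {"id": 3, "ts": 30, "value": "c"},
        {"id": 4, "ts": 4000, "value": "d"},
    ]
    segments = build_segments(rows)
    print(apply_update(segments, {"id": 2, "ts": 20, "value": "B"}))  # 3
```

Changing one value rewrites three rows here; with production-sized segments, the same single update touches far more data, which is why update-heavy workloads are costly.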

Rockset

Rockset is a fully managed real-time analytics database built for the cloud; there’s nothing to manage or tune. It enables millisecond-latency analytical queries using full-featured SQL. Rockset is well suited to a wide variety of query patterns due to its Converged Index™, which combines a column index, a row index, and a search index. Rockset’s custom SQL query optimizer automatically analyzes each query and chooses the appropriate index based on the fastest query plan. Additionally, its architecture allows for full isolation of the compute used for ingesting data and the compute used for querying data (more details here).

When it comes to transformations and enrichment, Rockset has many of the same capabilities as stream processing frameworks. It supports joining streams at ingest (inner joins only) and enriching streaming data with historical data at query time, and it avoids the need for denormalization entirely. In fact, Rockset can ingest and index schemaless event data, including deeply nested objects and arrays. Rockset is a fully mutable database, and can handle updates without a performance penalty. If ease of use and cost/performance are important factors, Rockset is an ideal RTA database for streaming data. For a deeper dive on this topic, check out this blog.

Finishing Up

Stream processing frameworks are well suited to enriching streaming data, filtering and aggregations, and advanced use cases like image recognition and natural language processing. However, these frameworks are not typically used for persistent storage and have only basic support for indexes; they often require an RTA database for storing and querying data. Further, they require significant expertise to set up, tune, maintain, and debug. Stream processing tools are both powerful and high maintenance.

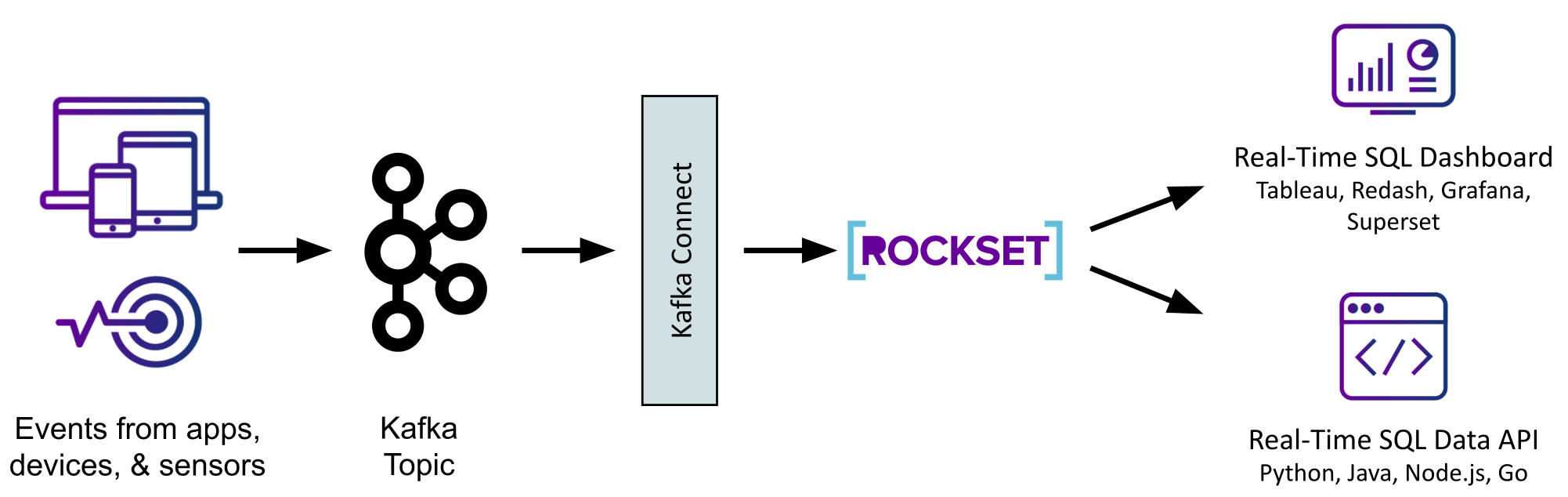

RTA databases are ideal stream processing sinks. Their support for high-volume ingest and indexing enables sub-second analytical queries on real-time data. Connectors for many other common data sources, like data lakes, warehouses, and databases, allow for a broad range of enrichment capabilities. Some RTA databases, like Rockset, also support streaming joins, filtering, and aggregations at ingest.

The next post in the series will explain how to operationalize RTA databases for advanced analytics on streaming data. In the meantime, if you’d like to get smart on Rockset’s real-time analytics database, you can start a free trial today. We provide $300 in credits and don’t require a credit card number. We also have many sample data sets that mimic the characteristics of streaming data. Go ahead and kick the tires.
