Apache Druid is a distributed real-time analytics database commonly used with user activity streams, clickstream analytics, and Internet of things (IoT) device analytics. Druid is often helpful in use cases that prioritize real-time ingestion and fast queries.

Druid’s list of features includes individually compressed and indexed columns, various stream ingestion connectors and time-based partitioning. It is known to perform efficiently when used as designed: to perform fast queries on large amounts of data. However, using Druid can be problematic when used outside its normal parameters — for example, to work with nested data.

In this article, we’ll discuss ingesting and using nested data in Apache Druid. Druid doesn’t store nested data in the form often found in, say, a JSON dataset. So, ingesting nested data requires us to flatten our data before or during ingestion.

Flattening Your Data

We can flatten data before or during ingestion using Druid’s field flattening specification. We can also use other tools and scripts to help flatten nested data. Our final requirements and import data structure determine the flattening choice.

Several text processors help flatten data, and one of the most popular is jq. jq is like JSON’s grep, and a jq command is like a filter that outputs to the standard output. Chaining filters through piping allows for powerful processing operations on JSON data.

For the following two examples, we’ll create the governors.json file. Using your favorite text editor, create the file and copy the following lines into it:

[

{

"state": "Mississippi",

"shortname": "MS",

"info": {"governor": "Tate Reeves"},

"county": [

{"name": "Neshoba", "population": 30000},

{"name": "Hinds", "population": 250000},

{"name": "Atlanta", "population": 19000}

]

},

{

"state": "Michigan",

"shortname": "MI",

"info": {"governor": "Gretchen Whitmer"},

"county": [

{"name": "Missauki", "population": 15000},

{"name": "Benzie", "population": 17000}

]

}

]

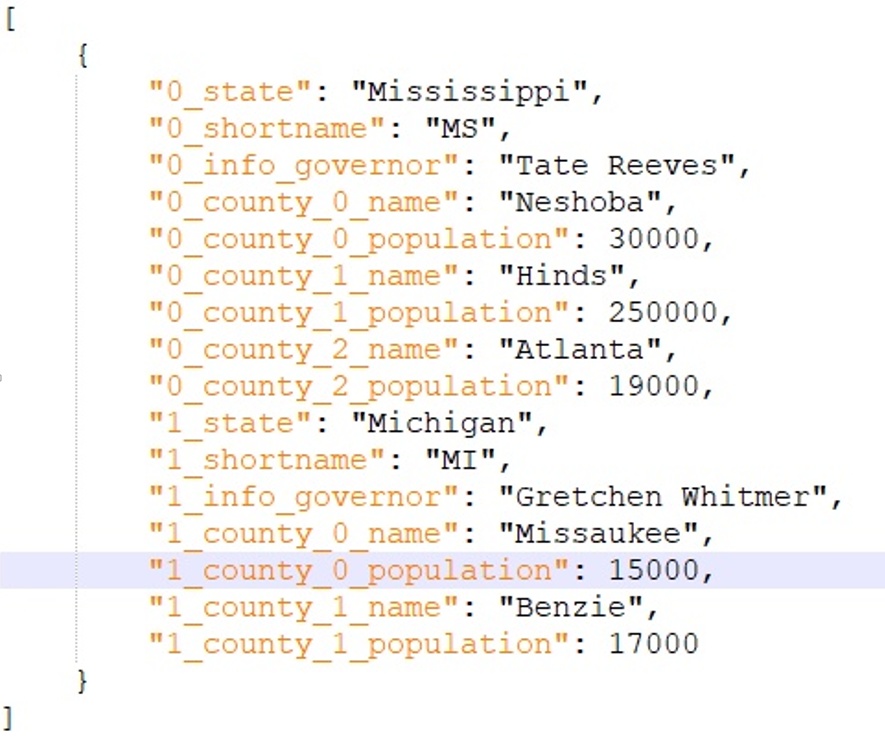

With jq installed, run the following from the command line:

$ jq --arg delim '_' 'reduce (tostream|select(length==2)) as $i ({};

.[[$i[0][]|tostring]|join($delim)] = $i[1]

)' governors.json

The results are:

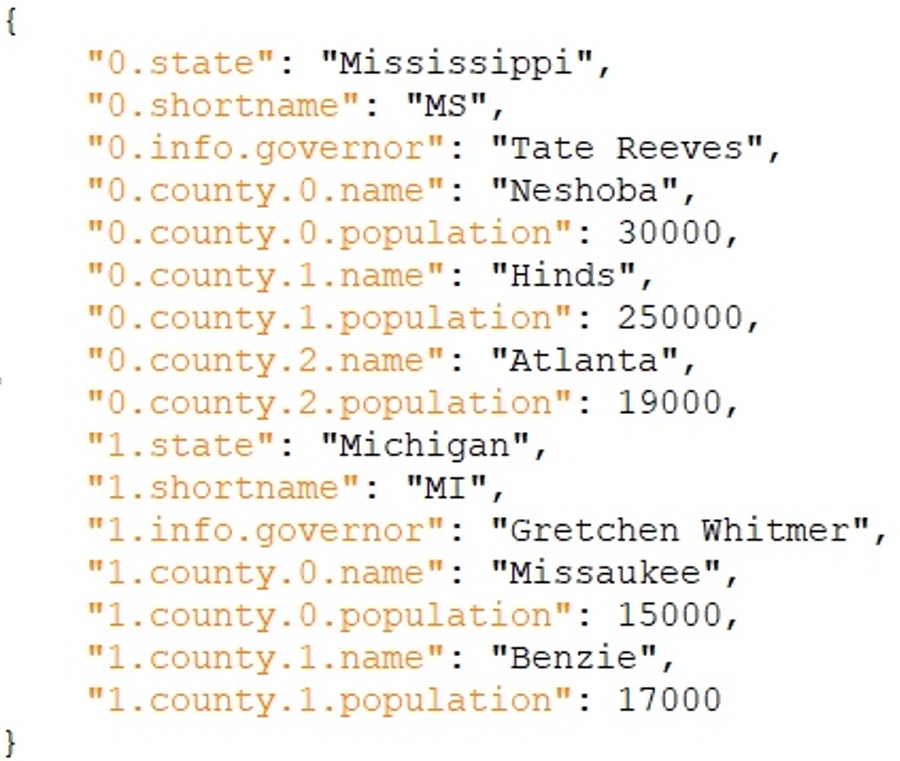

The most flexible data-flattening method is to write a script or program. Any programming language will do for this. For demonstration purposes, let’s use a recursive method in Python.

def flatten_nested_json(nested_json):

out = {}

def flatten(njson, name=""):

if type(njson) is dict:

for path in njson:

flatten(njson[path], name + path + ".")

elif type(njson) is list:

i = 0

for path in njson:

flatten(path, name + str(i) + ".")

i += 1

else:

out[name[:-1]] = njson

flatten(nested_json)

return out

The results look like this:

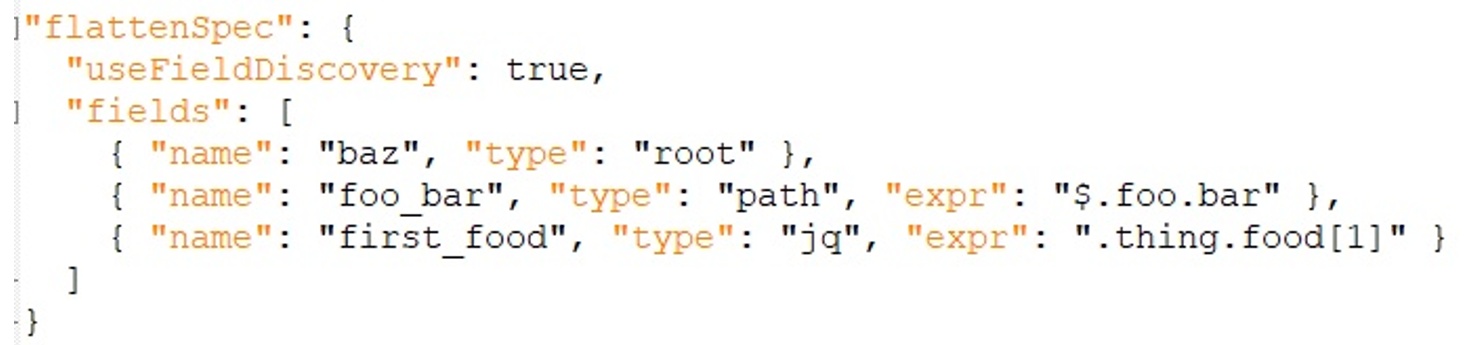

Flattening can also be achieved during the ingestion process. The FlattenSpec is part of Druid’s ingestion specification. Druid applies it first during the ingestion process.

The column names defined here are available to other parts of the ingestion specification. The FlattenSpec only applies when the data format is JSON, Avro, ORC, or Parquet. Of these, JSON is the only one that requires no further extensions in Druid. In this article, we’re discussing ingestion from JSON data sources.

The FlattenSpec takes the form of a JSON structure. The following example is from the Druid documentation and covers all of our discussion points in the specification:

The useFieldDiscovery flag is set to true above. This allows the ingestion specification to access all fields on the root node. If this flag were to be false, we’d add an entry for each column we wished to import.

In addition to root, there are two other field definition types. The path field definition contains an expression of type JsonPath. The “jq” type contains an expression with a subset of jq commands called jackson-jq. The ingestion process uses these commands to flatten our data.

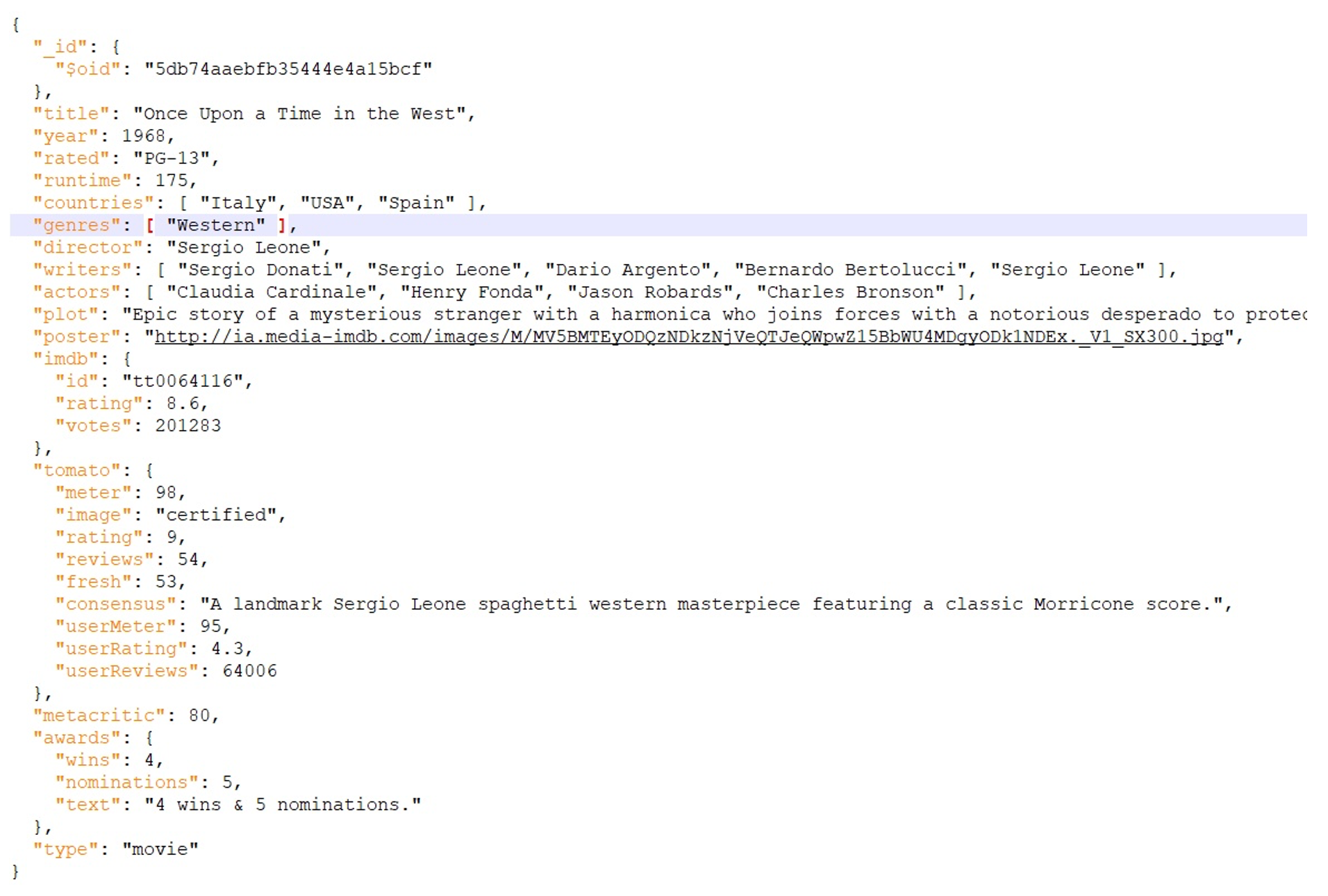

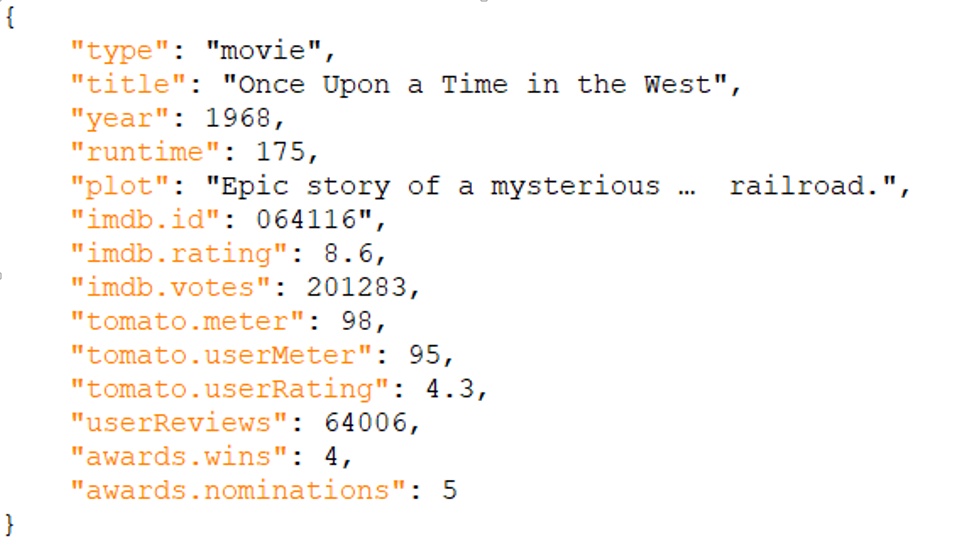

To explore this in more depth, we’ll use a subset of IMDB, converted to JSON format. The data has the following structure:

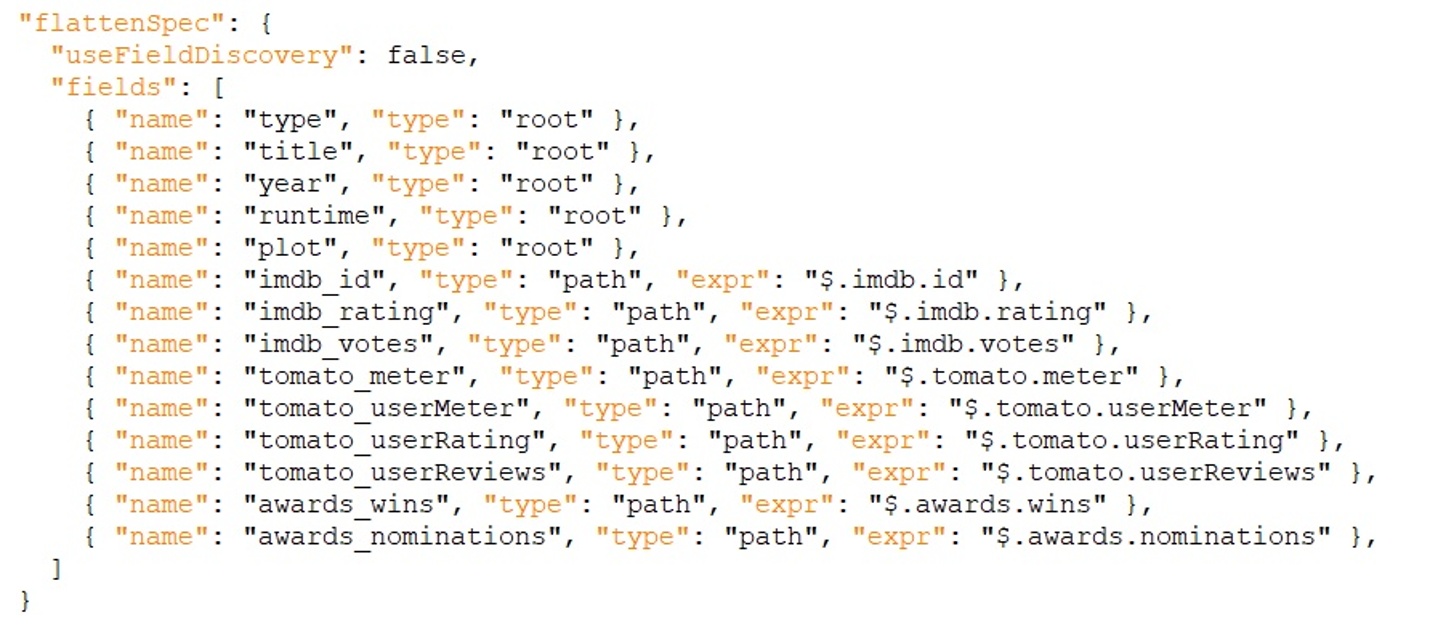

Since we are not importing all the fields, we don’t use the automatic field discovery option.

Our FlattenSpec looks like this:

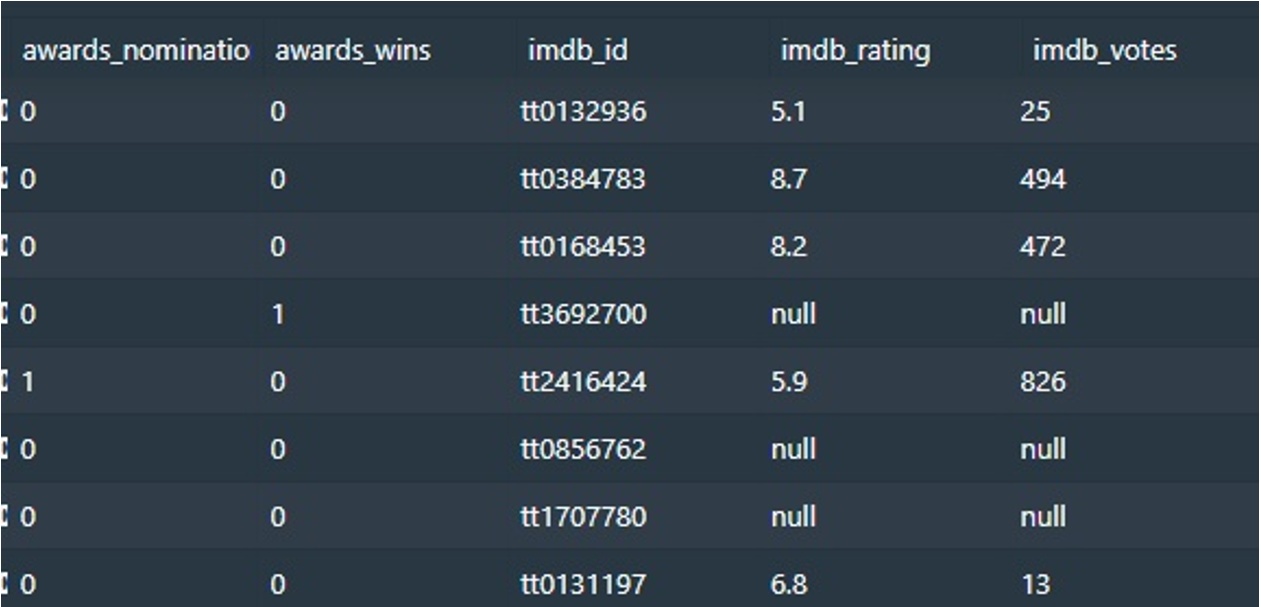

The newly created columns in the ingested data are displayed below:

Querying Flattened Data

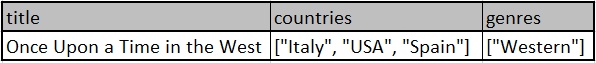

On the surface, it seems that querying denormalized data shouldn’t present a problem. But it may not be as straightforward as it seems. The only non-simple data type Druid supports is multi-value string dimensions.

The relationships between our columns dictate how we flatten your data. For example, consider a data structure to determine these three data points:

- The distinct count of movies released in Italy OR released in the USA

- The distinct count of movies released in Italy AND released in the USA

- The distinct count of movies that are westerns AND released in the USA

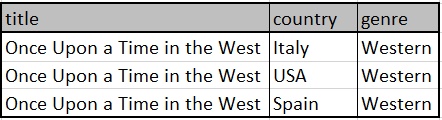

Simple flattening of the country and genre columns produces the following:

With the above structure, it’s not possible to get the distinct count of movies that are released in Italy AND released in the USA because there are no rows where country = “Italy” AND country = “USA”.

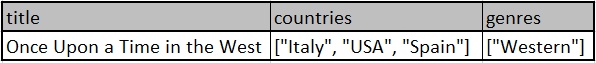

Another option is to import data as multi-value dimensions:

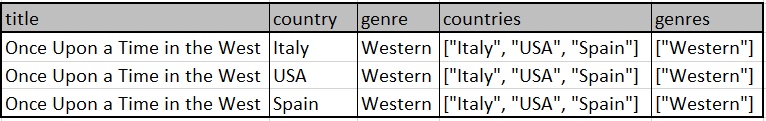

In this case, we can determine the “Italy” AND/OR “USA” number using the LIKE operator, but not the relationship between countries and genres. One organization proposed an alternative flattening, where Druid imports both the data and list:

In this case, all three distinct counts are possible using:

- Country = ‘Italy’ OR County = ‘USA’

- Countries LIKE ‘Italy’ AND Countries LIKE ‘USA’

- Genre = ‘Western’ AND Countries LIKE ‘USA’

Alternatives to Flattening Data

In Druid, it’s preferable to use flat data sources. Yet, flattening may not always be an option. For example, we may want to change dimension values post-ingestion without re-ingesting. Under these circumstances, we want to use a lookup for the dimension.

Also, in some circumstances, joins are unavoidable due to the nature and use of the data. Under these conditions, we want to split the data into one or more separate files during ingestion. Then, we can adapt the affected dimension to link to the “external” data whether by lookup or join.

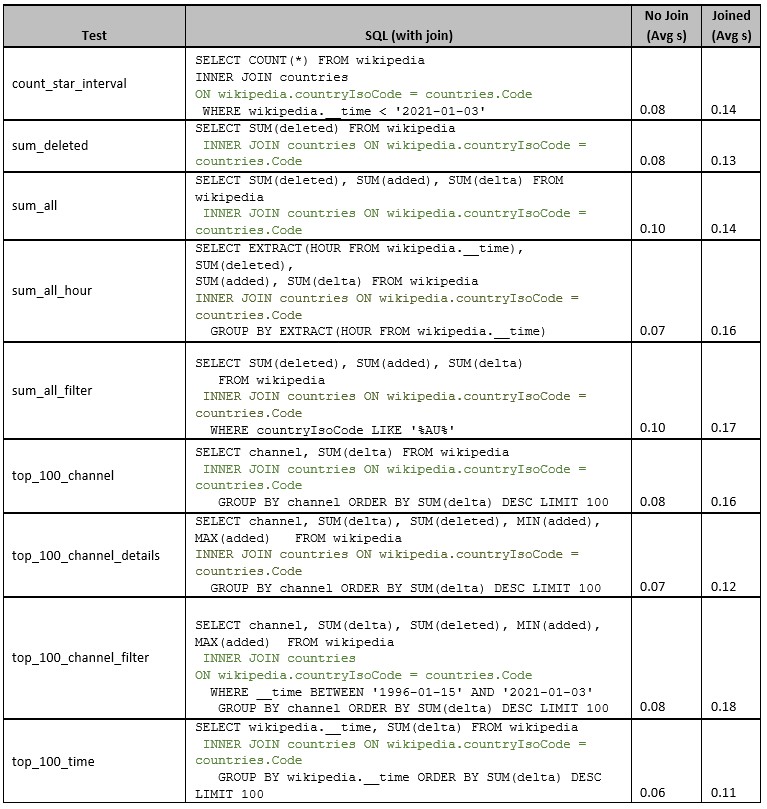

The memory-resident lookup is fast by design. All lookup tables must fit in memory, and when this isn’t possible, a join is unavoidable. Unfortunately, joins come at a performance cost in Druid. To show this cost, we’ll perform a simple join on a data source. Then we’ll measure the time to run the query with and without the join.

To ensure this test was measurable, we installed Druid on an old 4GB PC running Ubuntu Server. We then ran a series of queries adapted from those Xavier Léauté used when benchmarking Druid in 2014. Although this isn’t the best approach to joining data, it does show how a simple join affects performance.

As the chart demonstrates, each join makes the query run a few seconds slower — up to twice as slow as queries without joins. This delay adds up as your number of joins increases.

Nested Data in Druid vs Rockset

Apache Druid is good at doing what it was designed to do. Issues occur when Druid works outside those parameters, such as when using nested data.

Available solutions to cope with nested data in Druid are, at best, clunky. A change in the input data requires adapting your ingestion method. This is true whether using Druid’s native flattening or some form of pre-processing.

Contrast this with Rockset, a real-time analytics database that fully supports the ingestion and querying of nested data, making it available for fast queries. The ability to handle nested data as is saves a lot of data engineering effort in flattening data, or otherwise working around this limitation, as we explored earlier in the blog.

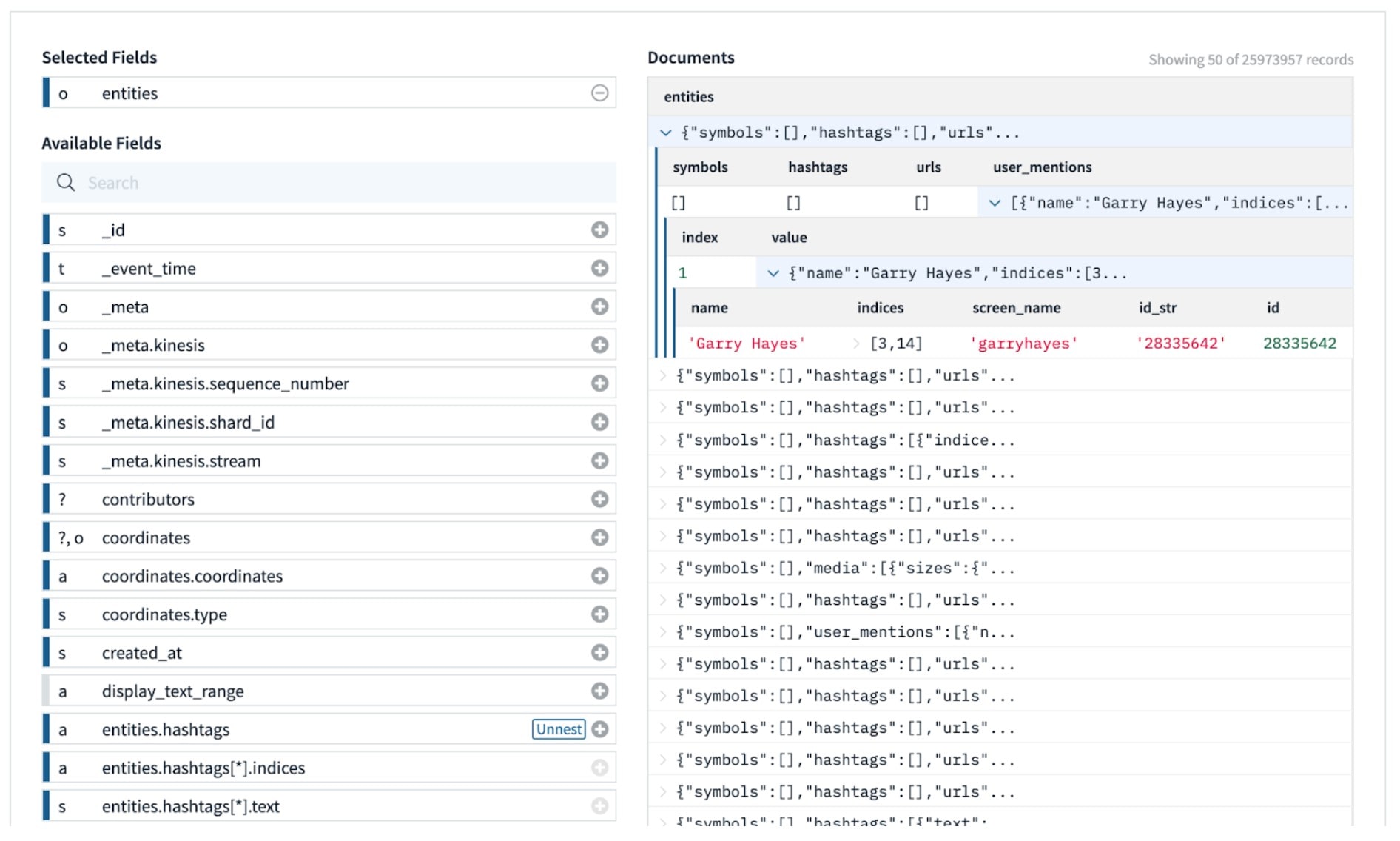

Rockset indexes every individual field without the user having to perform any manual specification. There is no requirement to flatten nested objects or arrays at ingestion time. An example of how nested objects and arrays are presented in Rockset is shown below:

If your need is for flat data ingestion, then Druid may be an appropriate choice. If you need deeply nested data, nested arrays, or real-time results from normalized data, consider a database like Rockset instead. Learn more about how Rockset and Druid compare.